🗂️ Portable Data Stack Concept with dbt, DuckDB, Sheets and Looker Studio

We frequently hear about BigQuery or Snowflake as the go-to options in the popular modern data stack, but are they really what you need?

There are lots of shiny objects we encounter that make us forget about simplicity and the principle of “the right tool for the job”.

Most of the time, small startups or even companies with small data volumes fall for these new tools.

In the end, they are simply overkill.

Then, the domino effect starts cascading into:

Expensive Fivetran setups

Huge bills from BigQuery

Numerous dbt models with

SELECT * FROM table;And much more.

Sometimes, the best way to start is less sexy.

📦 The Power of Portability

Over the past few months, I’ve been learning in-depth concepts about Docker and portability.

If you are also interested, you can read:

There are tons of companies overkilling data stacks with huge setups that end up leveraging 1% of those features.

The funny thing is, we are living in amazing times where you can achieve incredible results with tiny but powerful tools.

You can utilize a 'data stack in a box' concept and use it on demand and be able to recreate it without all the painful maintenance when its not needed.

Also, it can help you save tons of unnecessary costs even if you use this kind of approaches on top of your modern data stack tools.

👨🏻💻 Fundamentals: take only what you need

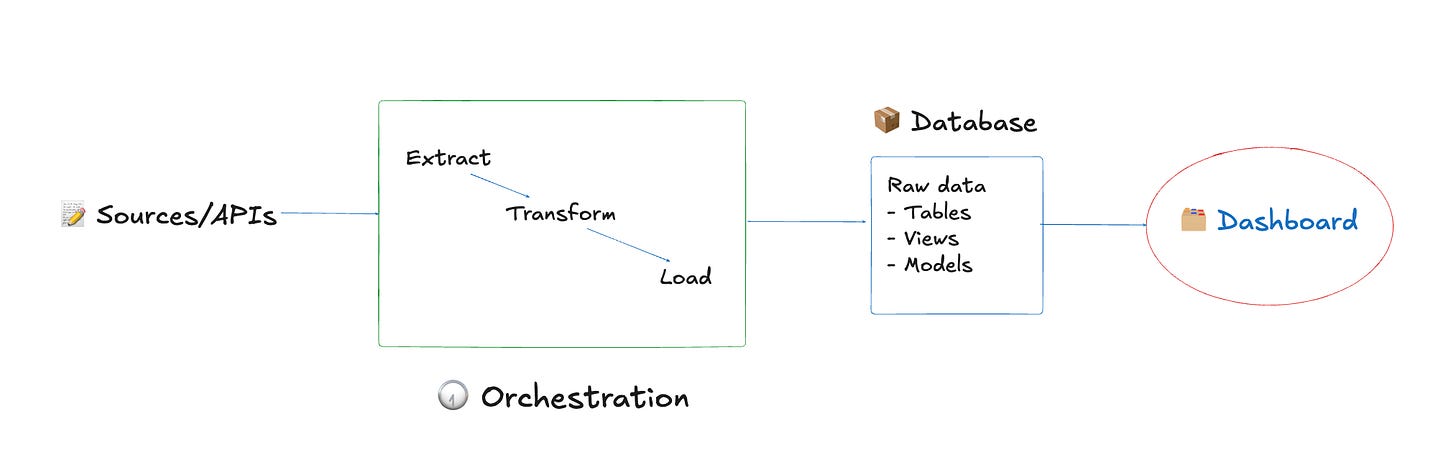

Most of the time, data stacks consist of these pieces:

ETL workflows

Database

Data visualization

Orchestration

This is the most common pattern that you will find if you work in 95% of data teams around the world.

There’s a lot of hype around real-time with Kafka & Flink, big data processing with Spark and open table format with Iceberg or Delta Lake.

But those use cases are exclusive to a subset of companies that have a suitable volume to make it worth it.

At the end of the day, you need to move data from A to B, add some logic, and automate it so you don’t hit the button every day.

That’s it. Don’t kill mosquitos with a bazooka!

🔎 Proof of Concept

To test this concept, I’ve created a repo:

👉 Github Repo Data Stack To Go With DuckDB & dbt

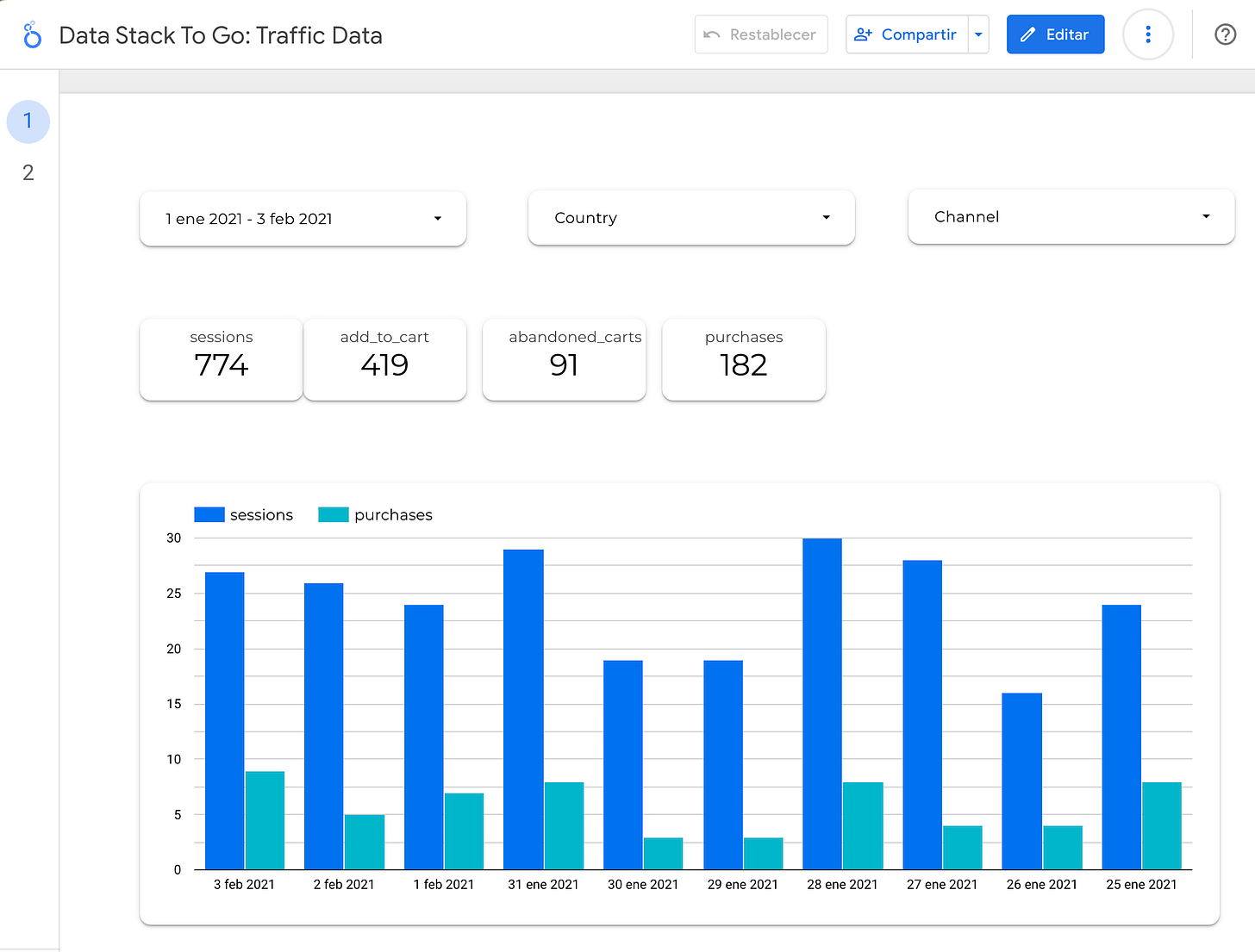

This repo is calling 3 endpoints from PiwikPRO API to get traffic analytics data.

Every day;

Github Actions will build the Docker container on its runner.

Call the APIs to get the data and save it into DuckDB database, all with pure Python.

dbt-core will build the models and run all test validations.

Again, with pure Python, we will get the dbt output in DuckDB and export it to Google Sheets.

Google Sheets is connected to a Looker Studio to build quick and nice dashboard to answer business questions.

In the upcoming sections I will go through each tool used!

🛠️ The right tool for the job

We’ll go through the tools one by one to understand what is the bare minimum that can do a huge difference in your portable stack:

🐍 Pure Python (Extraction and Loading)

Nothing much to say.

No need to learn Airflow decorators or random syntax, just call APIs with pure python code!

🦆 DuckDB (In memory Database)

In this other post, I’ve compared Pandas, Polars and DuckDB based on file types and performance.

What DuckDB can do is beyond comprehension.

You can query millions of rows in a couple of seconds without major complications.

You need to come a really long way before thinking that you need another tool to achieve what you want.

And, the best part is you can work with SQL when using it!

🔂 dbt-core (Transformation)

At the moment, the main character in the whole data space covering the “T” in the ELT.

It’s pretty easy to build data models with custom logic and let the tool connect the dots with sequential jinja templating.

Back in the day, doing this with stored procedures was hell compared to this current approach.

But, options such as SQL-mesh or SDF are starting to compete with interesting solutions.

🕢 Github Actions (Orchestration)

It’s free, it has no learning curve. And it works as a simple CRON.

You don’t need to go with Airflow right away. Forget about it!

📄 Google Sheets (Database)

Data people are formatted to hate GSheets because stakeholders ask them to export reports to work with the data on a spreadsheet.

If you can beat them, join them.

Yes, you will need to become familiar with setting up service accounts to interact with the Google Sheets API, but that’s straightforward with proper documentation.

Besides, really cool things are being cooked around DuckDB and spreadsheets!

📊 Looker Studio (Data Viz)

Make easy dashboards without weird customization and logics.

As you update your Google Sheets, the native connection will do the rest.

Is more than you need to get started, PowerBI or Tableau can wait.

👀 Outro

You need to experiment to find the most suitable tools for your use-case and think in the long-term.

You always need to study the context of your company and be mindful about all scenarios possible.

For example, Google Sheets has a limit of 10M cells at the moment. This number can be retrieved if you multiply rows by columns. That said, if you are worried about it, your data volume might need another solution!

Practice, research, and make the most out of all the tools at your disposal!

However, don’t take shortcuts, as the quickest solution is not always the best.

📝 TL;DR

📦 The Power of Portability

👨🏻💻 Fundamentals: take only what you need

🔎 Proof of Concept

🛠️ The right tool for the job

If you enjoyed the content, hit the like ❤️ button, share, comment, repost, and all those nice things people do when like stuff these days. Glad to know you made it to this part!

Hi, I am Alejandro Aboy. I am currently working as a Data Engineer. I started in digital marketing at 19. I gained experience in website tracking, advertising, and analytics. I also founded my agency. In 2021, I found my passion for data engineering. So, I shifted my career focus, despite lacking a CS degree. I'm now pursuing this path, leveraging my diverse experience and willingness to learn.

Thanks for the insights Alejandro. I am a finance guy trying to understand the technical side of the data I use in Excel everyday. This helps simplify what I am trying to do instead using 10 different advanced tools to get the job done, I can learn a few tools that do 99% of what I need.

Great read, really enjoyed it!

I’ve wrestled with that classic question myself: where do I get free compute for small projects when storage and analytics are already sorted?

Thanks, GitHub Actions (or Gitlab runners) is the good piece of advice.