SQL to dbt: Get Started with Analytics Engineering

There are many data analysts stuck in old ways of using SQL for data analytics when they could be learning the mindset and tools for Analytics Engineering that could change things dramatically.

Hi there! Alejandro here 😊

Suscribe if you like to read about technical data & AI learnings, deep dives!

Enjoy the reading and let me know in the comments what you think about it 👨🏻💻

SQL was my first analytics tool, just like for many data pros.

I wrote custom SQL queries in Looker Studio, created aggregations, and debugged 1000-line SQL queries someone else had over-engineered—then got excited when I discovered cool features like WINDOW FUNCTIONS.

But SQL also caused some headaches.

Scheduled queries (also called stored procedures) are a pain. They often contain long blocks of repeated code, changed only by a CASE WHEN condition.

There was no clear documentation explaining what each TABLE or VIEW did or what the column metadata meant. In the best-case scenario, some good soul was adding a TABLE description that you could check to have some context.

When errors happened, we played "guess which script broke the pipeline" or wondered if a colleague's latest changes had ruined our carefully crafted transformations.

Developers fighting custom requests often apply patches "here and there," which result in Frankensteins scattered all over the place. This is not a SQL thing per se, but it's usually how SQL creates technical debt.

Because of all this, dbt can become your new best friend.

⚠️ Disclaimer: I've used dbt for over three years and haven't explored other options like SDF (acquired by dbt), SQLMesh, or Dataform deeply enough to compare. Saying good things about dbt doesn't mean the others aren't good. I encourage you to try them yourself!

Let's get started.

🎯 You Already Know 90% Of What You Need

dbt isn't a new language. It's SQL with "superpowers." You keep using SQL but within a context where everything is properly connected to enhance logic transformations chains across all your data layers.

Whether you use PostgreSQL, BigQuery, Snowflake, or Redshift, the transition is smooth.

You'll learn skills like Jinja templating, dbt commands, and data modeling techniques. These will help you get the most out of moving from plain SQL to dbt.

At its core, dbt runs dependent SQL queries in a logical order. It sounds simple, yet it took a long time for this to appear in the market.

dbt transforms data to make it ready for analytics.

dbt handles the T in ETL (Extract, Transform, Load). Many papers credit dbt as a key reason for the shift from ETL to ELT, where transformations happen after loading.

🔍 SQL Vs. dbt: A Deep Dive On Queries

Your current SQL might look like this:

-- some_important_analysis.sql

SELECT customer_id,

SUM(order_amount) as total_spent

FROM (

SELECT table_1.column1,

table_b.column2,

table_a.column3,...

FROM table_a

JOIN table_b

ON table_a.id = table_b.id

) as raw_orders

WHERE order_date >= '2024-01-01'

GROUP BY customer_id; In dbt, the same logic becomes:

-- models/marts/customer_summary.sql

SELECT customer_id,

SUM(order_amount) as total_spent

FROM {{ ref('staging_orders') }}

WHERE order_date >= '2024-01-01'

GROUP BY customer_id The {{ ref('staging_orders') }} handles dependency management for you.

In the first example, raw_orders is a VIEW in your database with some logic, probably created by other analyses or backend processes.

dbt compiles SQL cleanly, making your code easy to trace and ready for new team members.

⚔️ What Concepts Does dbt Bring To The Table?

The biggest improvements are modularity, dependency management, testing, version control, and Jinja templating.

🧘 Modularity: Break The Problem Into Pieces

Old way: SQL files scattered across your database. You open a schema and see a list of TABLEs and VIEWs with no context or order. Good luck finding anything without debug mode.

dbt way: Everything lives in a structured project with organized folders. The only risk is messy projects where people ignore clean structure and put files anywhere.

🆘 No More "Table Not Found" Panic Attacks

dbt adds the ref() macro, which builds a dependency graph.

dbt runs everything in the right order, every time.

Remember that earlier code? The ref() macro makes sure everything compiles cleanly and runs smoothly across your project.

You might write a 500-line file, but with ref(), it looks like a 50-line file.

With AI-powered IDEs like Cursor, you can add rules to scan dependencies faster. This speeds up understanding projects when making precise changes.

✅ Fast Testing And Deployment

dbt has built-in tests to catch nulls, duplicates, and broken relationships before production.

You can even come up with your own custom checks to test edge cases for your particular business needs.

You can run these tests during development and deployment to keep everything on track.

⚒️ Version Control With Git

One big problem in database development is tracking changes over time. Without version control, tracing issues is hard.

dbt solves this by being repository-driven, so you know exactly when changes happened and rollback without all the struggle of figuring out what happened when.

🧙 Macros With Jinja Templating

This is probably the only new concept you need to learn, and it's intuitive enough for you to follow.

Jinja lets you write dynamic SQL that adapts based on conditions, variables, and logic.

These are dbt macros ways to reuse code without rewriting it.

If you need variables or dynamic logic, you set them inside a macro. The code then behaves as you want without complex edge-case handling.

For example:

{% set important_date = '2024-01-01' %}

SELECT *

FROM {{ ref('orders') }}

WHERE order_date >= '{{ important_date }}'

{% if var('include_refunds', false) %}

AND status != 'refunded'

{% endif %} At runtime, this compiles to:

SELECT *

FROM (

SELECT columns

FROM orders

)

WHERE order_date >= '2024-01-01' Why? important_date is a static value. include_refunds defaults to false, so the extra status is not considered during compilation.

The beauty is in the simplicity: you're still writing SQL, just with some template magic.

Imagine a 20-condition CASE WHEN using Jinja templates. Amazing!

What dbt isn’t

There's a common idea that dbt = Analytics Engineering, but that's not quite right.

Yes, dbt is mostly used by that role, but it's also a tool for data modeling and that doesn't mean dbt equals data modeling.

I explain why having a strong cross-functional skill set is valuable for your data career in this one in case you are interested:

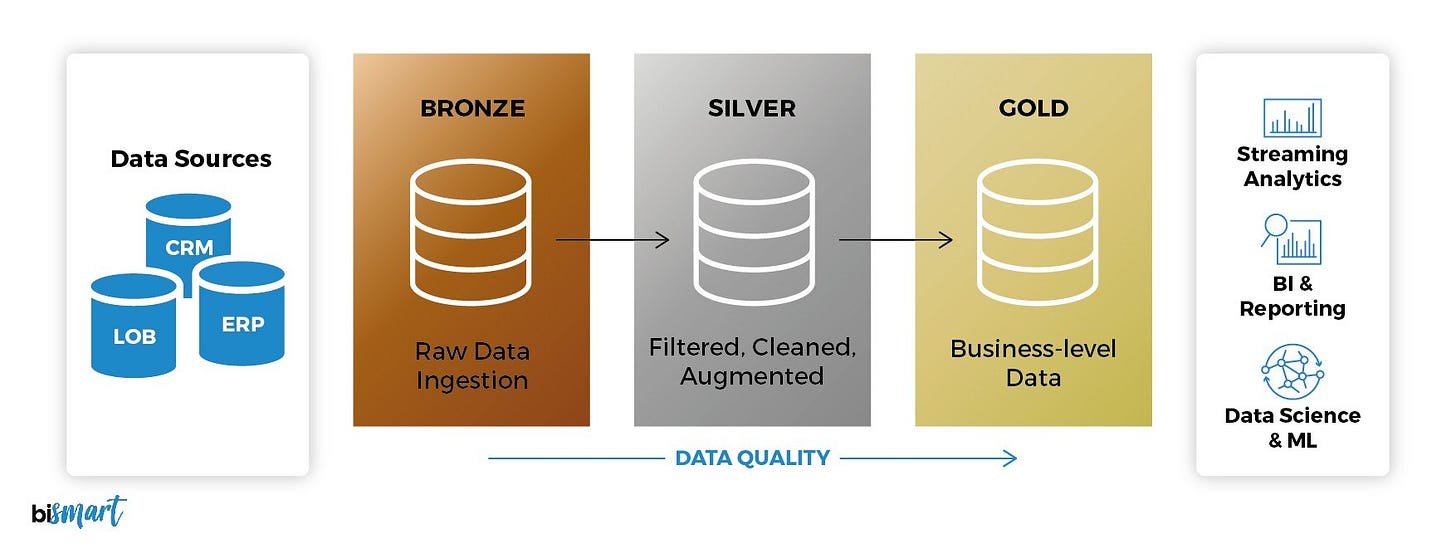

🥇 The Medallion Architecture

If you've worked with data modeling, you know concepts like normalization, denormalization, star schema, dimensions, and facts. These are clean ways to design database schemas with business in mind.

This post isn't about data modeling, but I'll show how dbt helps improve it using medallion architecture principles.

This architecture breaks down into Bronze, Silver, and Gold layers. dbt usually calls these staging, intermediate, and marts/facts:

🥉 Bronze Layer (Raw Ingestion - Staging)

Use source() to reference raw tables.

FROM {{ source('raw_system', 'customer_data') }} as stg_customers 🥈 Silver Layer (Cleaned & Standardized - Intermediate)

Clean and standardize data in staging models.

SELECT UPPER(TRIM(customer_name)) as customer_name,

PARSE_DATE('%Y-%m-%d', date_string) as clean_date

FROM {{ ref('stg_customers') }} as int_customers 🥇 Gold Layer (Business Ready Analytics - Marts/Facts)

Aggregate data for business use.

SELECT region,

SUM(revenue) as total_revenue

FROM {{ ref('int_customer') }}

LEFT JOIN {{ ref('int_sales') }}

ON int_customer.customer_id = int_sales.customer_id

GROUP BY region🚀 My Learning Path Recommendation To Become An Analytics Engineer

Take the dbt fundamentals course. It's free and easy to follow.

Find a real project and slowly implement dbt layers: source, staging, intermediate, and so on.

Remember common issues and use built-in or custom dbt tests to catch them before production.

Identify repeated patterns and convert them into macros.

Plan this as a 3 month journey, depending on your workload.

Work with real data. Sample projects are good to start but can leave you stuck if you don't try real scenarios.

Learning dbt doesn't just make you more relevant.

It transforms you from a data analyst into an analyst on steroids or a data engineer with strong analytical skills (or Analytics Engineer).

But being Analytics Engineer is not dependant on knowing dbt, it’s about transforming business logic into analytics ready assets with the right mix of data modeling and technology.

You become someone who can build production level data pipelines, create self-documenting data models and scale analytics across entire organizations.

📝 TL;DR

• dbt is 90% SQL you already know, plus 10% templating magic

• ref() and source() replace hard-coded table names and manage dependencies

• Use Jinja templates to add variables and logic to your SQL without losing sanity

• Catch data quality issues before they reach production

• Use the Medallion Architecture for clean, self-documenting data pipelines

• Transform from a "SQL analyst" into an Analytics Engineer

If you enjoyed the content, hit the like ❤️ button, share, comment, repost, and all those nice things people do when like stuff these days. Glad to know you made it to this part!

Hi, I am Alejandro Aboy. I am currently working as a Data Engineer. I started in digital marketing at 19. I gained experience in website tracking, advertising, and analytics. I also founded my agency. In 2021, I found my passion for data engineering. So, I shifted my career focus, despite lacking a CS degree. I'm now pursuing this path, leveraging my diverse experience and willingness to learn.